Elasticsearch

From https://www.elastic.co/products/elasticsearch:

Elasticsearch is a distributed, RESTful search and analytics engine capable of addressing a growing number of use cases. As the heart of the Elastic Stack, it centrally stores your data for lightning fast search, fine‑tuned relevancy, and powerful analytics that scale with ease.

Data

Indexing

Most data is associated with a data stream, an abstraction over traditional indices that uses one or more backing indices to store and manage its data. Data streams allow for greater flexibility in data management.

Data streams can be targeted directly during search and other operations, just as indices are.

For example, a CLI-based query against Zeek connection records would look like the following:

so-elasticsearch-query logs-zeek-so/_search?q=event.dataset:conn

When this query is run against the backend data, it is actually targeting one or more backing indices, such as:

.ds-logs-zeek-so-2022-03-07.0001

.ds-logs-zeek-so-2022-03-08.0001

.ds-logs-zeek-so-2022-03-08.0002

Similarly, you can target a single backing index with the following query:

so-elasticsearch-query .ds-logs-zeek-so-2022-03-08.0001/_search?q=event.dataset:conn

You can learn more about data streams at https://www.elastic.co/guide/en/elasticsearch/reference/current/data-streams.html.
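The relationship between a data stream and its backing indices can be illustrated with a short Python sketch. It assumes backing index names follow the illustrative pattern used in the examples above (`.ds-<data stream>-<date>.<generation>`); the regular expression and the helper name are hypothetical, and names on a real grid may be formatted differently.

```python
import re
from collections import defaultdict

# Assumed pattern, taken from the example names in this section:
#   .ds-<data stream>-<YYYY-MM-DD>.<generation>
BACKING_INDEX = re.compile(
    r"^\.ds-(?P<stream>.+)-(?P<date>\d{4}-\d{2}-\d{2})\.(?P<generation>\d+)$"
)


def group_by_data_stream(index_names):
    """Map each data stream name to its backing indices (hypothetical helper)."""
    streams = defaultdict(list)
    for name in index_names:
        match = BACKING_INDEX.match(name)
        if match:  # skip anything that does not look like a backing index
            streams[match.group("stream")].append(name)
    return dict(streams)


indices = [
    ".ds-logs-zeek-so-2022-03-07.0001",
    ".ds-logs-zeek-so-2022-03-08.0001",
    ".ds-logs-zeek-so-2022-03-08.0002",
]
grouped = group_by_data_stream(indices)
print(grouped)
```

All three names resolve to the single data stream `logs-zeek-so`, which is why a search against the data stream reaches every one of them.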

Schema

Security Onion tries to adhere to the Elastic Common Schema wherever possible. Otherwise, additional fields or slight modifications to native Elastic field mappings may be found within the data.

Management

Elasticsearch indices are managed by the so-elasticsearch-indices-delete utility and ILM (https://www.elastic.co/guide/en/elasticsearch/reference/current/index-lifecycle-management.html).

so-elasticsearch-indices-delete handles size-based index deletion and ILM handles the following:

size-based index rollover

time-based index rollover

time-based content tiers

time-based index deletion

Default ILM policies are preconfigured and associated with various data streams and index templates in /opt/so/saltstack/default/salt/elasticsearch/defaults.yaml.
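To make the four ILM responsibilities above concrete, here is a minimal sketch of a lifecycle policy expressed as a Python dictionary and printed as JSON. The structure follows the Elasticsearch ILM policy format, but every value is a placeholder chosen for illustration and is not a Security Onion default.

```python
import json

# Hypothetical policy: all ages and sizes below are placeholders.
example_policy = {
    "policy": {
        "phases": {
            "hot": {
                "min_age": "0ms",
                "actions": {
                    # Size-based and time-based rollover: whichever
                    # condition is met first triggers a new backing index.
                    "rollover": {
                        "max_primary_shard_size": "50gb",
                        "max_age": "30d",
                    }
                },
            },
            # Time-based content tier: the index enters the warm phase
            # once it is older than min_age.
            "warm": {
                "min_age": "30d",
                "actions": {"set_priority": {"priority": 50}},
            },
            # Time-based deletion.
            "delete": {"min_age": "365d", "actions": {"delete": {}}},
        }
    }
}

print(json.dumps(example_policy, indent=2))
```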

Querying

You can query Elasticsearch using web interfaces like Alerts, Dashboards, Hunt, and Kibana. You can also query it from the command line using a tool like curl or so-elasticsearch-query.
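As a rough illustration of what a command-line query looks like on the wire, the following Python sketch builds a URI search path and a roughly equivalent request body that uses the `query_string` query. Both helper names are hypothetical, and nothing is sent to Elasticsearch.

```python
import json
from urllib.parse import quote


def uri_search_path(target, query):
    """Build a '<target>/_search?q=<query>' path (hypothetical helper)."""
    # Keep ':' and '*' readable; percent-encode spaces and other characters.
    return f"{target}/_search?q={quote(query, safe=':*')}"


def query_string_body(query):
    """Request-body form of the same search, using the query_string query."""
    return {"query": {"query_string": {"query": query}}}


path = uri_search_path("logs-zeek-so", "event.dataset:conn")
body = json.dumps(query_string_body("event.dataset:conn"))
print(path)
print(body)
```

The path form is convenient for quick checks, while the body form is easier to extend with aggregations, sorting, or size limits.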

Authentication

You can authenticate to Elasticsearch using the same username and password that you use for Security Onion Console (SOC).

You can add new user accounts to both Elasticsearch and Security Onion Console (SOC) at the same time as shown in the Adding Accounts section. Please note that if you instead create accounts directly in Elastic, then those accounts will only have access to Elastic and not Security Onion Console (SOC).
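If you script requests yourself, username and password authentication is typically sent as an HTTP Basic Authorization header. The sketch below only builds such a request with the Python standard library and does not send it. The URL, username, and password are placeholders, and whether Elasticsearch is reachable at that address depends on your deployment.

```python
import base64
import urllib.request


def basic_auth_header(username, password):
    """Return the value of an HTTP Basic Authorization header."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


# Placeholder URL and credentials; substitute your own values.
request = urllib.request.Request(
    "https://localhost:9200/_cluster/health",
    headers={"Authorization": basic_auth_header("analyst@example.com", "changeme")},
)

# The request is only constructed here; urllib.request.urlopen(request)
# would actually send it.
print(request.get_method(), request.full_url)
```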

Diagnostic Logging

Elasticsearch logs can be found in /opt/so/log/elasticsearch/. Logging configuration can be found in /opt/so/conf/elasticsearch/log4j2.properties.

Depending on what you’re looking for, you may also need to look at the Docker logs for the container:

sudo docker logs so-elasticsearch

Storage

All of the data Elasticsearch collects is stored under /nsm/elasticsearch/.

Parsing

Elasticsearch receives unparsed logs from Logstash or Elastic Agent. Elasticsearch then parses and stores those logs. Parsers are stored in /opt/so/conf/elasticsearch/ingest/. Custom ingest parsers can be placed in /opt/so/saltstack/local/salt/elasticsearch/files/ingest/. To make these changes take effect, restart Elasticsearch using so-elasticsearch-restart.
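An ingest parser is an Elasticsearch ingest pipeline: a JSON document containing an ordered list of processors. The following sketch builds one in Python and prints it as JSON. The processors used (`rename`, `set`, `remove`) are standard Elasticsearch processors, but the pipeline itself and its field names are hypothetical.

```python
import json

# Hypothetical pipeline; field names are illustrative only.
example_pipeline = {
    "description": "Example parser for a custom log source",
    "processors": [
        # Move a source-specific field to its Elastic Common Schema name.
        {
            "rename": {
                "field": "message2.src_ip",
                "target_field": "source.ip",
                "ignore_missing": True,
            }
        },
        # Tag every event with a dataset name.
        {"set": {"field": "event.dataset", "value": "example"}},
        # Drop the temporary object once its contents have been mapped.
        {"remove": {"field": "message2", "ignore_missing": True}},
    ],
}

print(json.dumps(example_pipeline, indent=2))
```

Processors run in the order listed, so the `remove` step comes last, after the fields it contains have been renamed.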

Elastic Agent may pre-parse or act on data before the data reaches Elasticsearch, altering the data stream or index to which it is written, or other characteristics such as the event dataset or other pertinent information. This configuration is maintained in the agent policy or integration configuration in Elastic Fleet.

Templates

Fields are mapped to their appropriate data type using templates. When making changes for parsing, it is necessary to ensure fields are mapped to a data type to allow for indexing, which in turn allows for effective aggregation and searching in Dashboards, Hunt, and Kibana. Elasticsearch leverages both component and index templates.

Component Templates

From https://www.elastic.co/guide/en/elasticsearch/reference/current/index-templates.html:

Component templates are reusable building blocks that configure mappings, settings, and aliases. While you can use component templates to construct index templates, they aren’t directly applied to a set of indices.

Also see https://www.elastic.co/guide/en/elasticsearch/reference/current/indices-component-template.html.

Index Templates

From https://www.elastic.co/guide/en/elasticsearch/reference/current/index-templates.html:

An index template is a way to tell Elasticsearch how to configure an index when it is created. Templates are configured prior to index creation. When an index is created - either manually or through indexing a document - the template settings are used as a basis for creating the index. Index templates can contain a collection of component templates, as well as directly specify settings, mappings, and aliases.

In Security Onion, component templates are stored in /opt/so/saltstack/default/salt/elasticsearch/templates/component/.

These component templates are referenced by the index template definitions in /opt/so/saltstack/default/salt/elasticsearch/defaults.yaml.
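The way an index template draws on component templates can be sketched as a recursive merge. The Python below is a simplified model rather than Elasticsearch's actual implementation: it applies the component templates listed in `composed_of` in order, then applies the index template's own `template` section so that it wins on conflict. All template names and values are hypothetical.

```python
import copy


def deep_merge(base, override):
    """Recursively merge two dicts; values in override win on conflict."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def resolve_template(index_template, component_templates):
    """Merge composed_of templates in order, then the index template itself."""
    resolved = {}
    for name in index_template.get("composed_of", []):
        resolved = deep_merge(resolved, component_templates[name]["template"])
    return deep_merge(resolved, index_template.get("template", {}))


# Hypothetical component templates.
component_templates = {
    "example-mappings": {
        "template": {
            "mappings": {"properties": {"source": {"properties": {"ip": {"type": "ip"}}}}}
        }
    },
    "example-settings": {
        "template": {"settings": {"index": {"number_of_replicas": 1}}}
    },
}

# Hypothetical index template that reuses both components.
index_template = {
    "index_patterns": ["logs-example-*"],
    "data_stream": {},
    "composed_of": ["example-mappings", "example-settings"],
    "template": {"settings": {"index": {"number_of_replicas": 0}}},
}

resolved = resolve_template(index_template, component_templates)
print(resolved)
```

In this example the mapping for `source.ip` comes from a component template, while the index template's own replica setting overrides the one supplied by `example-settings`.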

Community ID

Configuration

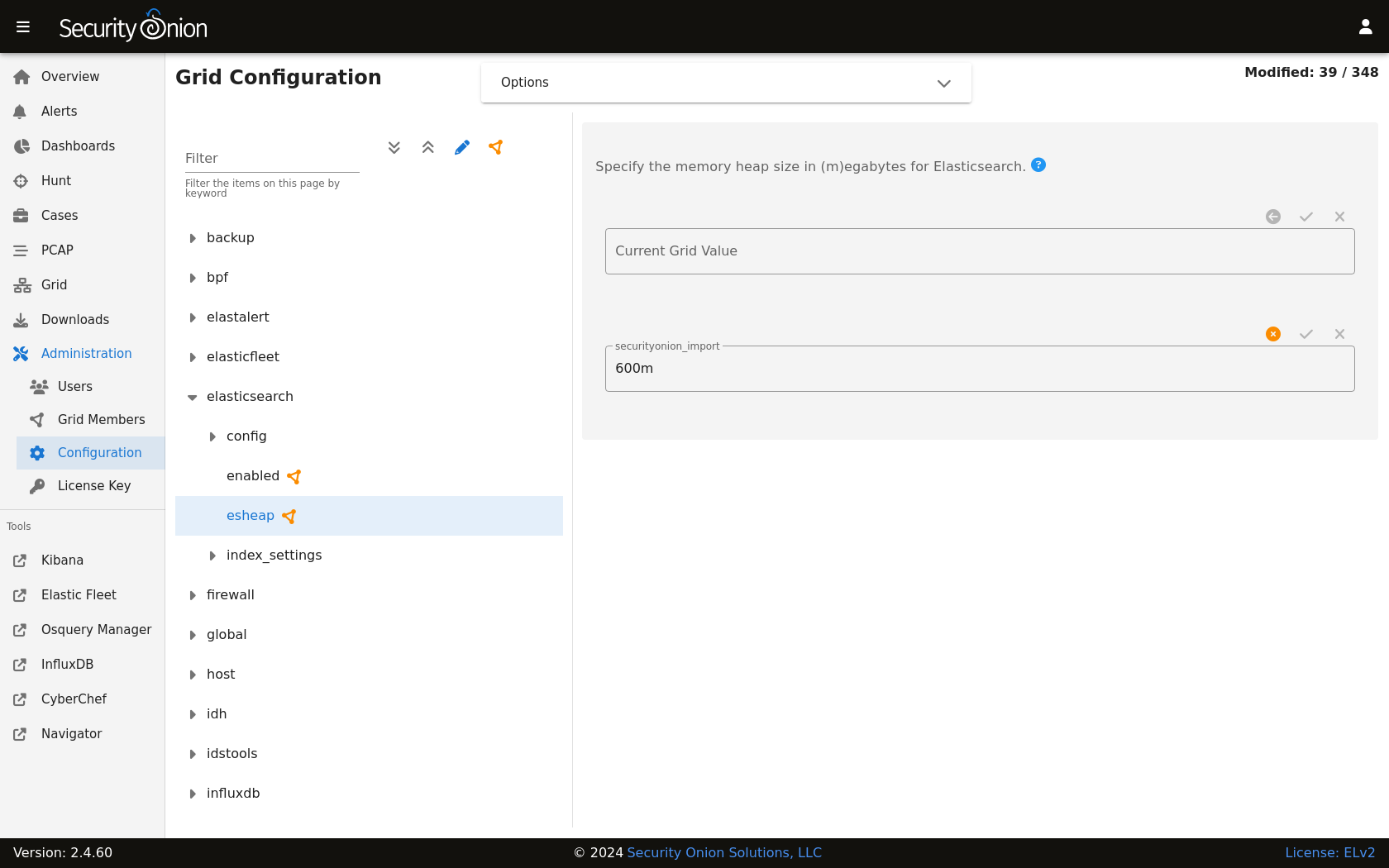

You can configure Elasticsearch by going to Administration –> Configuration –> elasticsearch.

field expansion matches too many fields

If you get errors like failed to create query: field expansion for [*] matches too many fields, limit: 3500, got: XXXX, this usually means that you are ingesting additional logs and now have more fields than the default max_clause_count value allows. To resolve this, go to Administration –> Configuration –> elasticsearch –> config –> indices –> query –> bool –> max_clause_count and adjust the value for every node running Elasticsearch in your deployment.

Heap Size

If total available memory is 8GB or greater, Setup configures the heap size to be 33% of available memory, but no greater than 25GB. You may need to adjust the value for heap size depending on your system’s performance. You can modify this by going to Administration –> Configuration –> elasticsearch –> esheap.
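The sizing rule above can be written as a small function. This is a sketch of the rule as described here, not the code Setup actually runs. It assumes that "GB" means GiB (1024³ bytes), and it returns `None` below 8GB because that case is not described in this section.

```python
GIB = 1024 ** 3  # assumption: "GB" in the text is treated as GiB


def default_heap_bytes(total_memory_bytes):
    """Return 33% of memory capped at 25GB, or None below the 8GB threshold."""
    if total_memory_bytes < 8 * GIB:
        return None  # behaviour for smaller systems is not covered here
    return min(total_memory_bytes * 33 // 100, 25 * GIB)


for memory_gib in (4, 16, 128):
    print(memory_gib, default_heap_bytes(memory_gib * GIB))
```

Under these assumptions the 25GB cap takes effect once a system has a little over 75GB of memory.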

Field limit

Security Onion currently defaults to a field limit of 5000. If you receive error messages from Logstash, or you would simply like to increase this, you can do so by going to Administration –> Configuration –> elasticsearch –> index_settings –> so-INDEX-NAME –> index_template –> template –> settings –> index –> mapping –> total_fields –> limit.

Please note that the change to the field limit will not occur immediately, only on index creation.
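To estimate how close a mapping is to the field limit, you can count its fields. The sketch below is an approximation with a hypothetical helper name: it counts every object, every leaf field, and every multi-field under a mapping's `properties`, which is close to, but not guaranteed to match, how Elasticsearch counts fields internally.

```python
def count_fields(properties):
    """Approximate the number of mapped fields under a 'properties' dict."""
    total = 0
    for definition in properties.values():
        total += 1  # the field or object itself
        total += count_fields(definition.get("properties", {}))  # nested fields
        total += len(definition.get("fields", {}))  # multi-fields such as .keyword
    return total


# Hypothetical mapping fragment.
example_properties = {
    "source": {"properties": {"ip": {"type": "ip"}, "port": {"type": "long"}}},
    "message": {"type": "text", "fields": {"keyword": {"type": "keyword"}}},
}

FIELD_LIMIT = 5000  # the default mentioned above
used = count_fields(example_properties)
print(f"{used} of {FIELD_LIMIT} fields used")
```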

Deleting Indices

Size-based Index Deletion

Size-based deletion of Elasticsearch indices occurs based on the value of cluster-wide elasticsearch.retention.retention_pct, which is derived from the total disk space available for /nsm/elasticsearch across all nodes in the Elasticsearch cluster. The default value for this setting is 50 percent.

To modify this value, first navigate to Administration -> Configuration. At the top of the page, click the Options menu and enable the "Show all configurable settings, including advanced settings" option. Then navigate to elasticsearch -> retention -> retention_pct. The change will take effect at the next 15-minute interval. If you would like the change to take effect immediately, click the SYNCHRONIZE GRID button under the Options menu at the top of the page.

If your indices are using more than retention_pct, then so-elasticsearch-indices-delete will delete old indices until disk space is back under retention_pct.
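The behaviour described above can be modelled in a few lines of Python. This is a simplified illustration and not the actual logic of so-elasticsearch-indices-delete: the `IndexInfo` type, the function name, and the oldest-first ordering by creation date are all assumptions made for the sketch.

```python
from typing import NamedTuple


class IndexInfo(NamedTuple):
    name: str
    created: str      # ISO date string, so lexical order matches age
    size_bytes: int


def indices_to_delete(indices, total_bytes, retention_pct=50):
    """Pick the oldest indices until usage is back under retention_pct."""
    limit = total_bytes * retention_pct / 100
    used = sum(index.size_bytes for index in indices)
    doomed = []
    for index in sorted(indices, key=lambda item: item.created):
        if used <= limit:
            break  # usage is back under the threshold
        doomed.append(index.name)
        used -= index.size_bytes
    return doomed


# Hypothetical cluster: 100 bytes of capacity, 90 bytes in use.
example = [
    IndexInfo("index-c", "2022-03-09", 30),
    IndexInfo("index-a", "2022-03-07", 30),
    IndexInfo("index-b", "2022-03-08", 30),
]
print(indices_to_delete(example, total_bytes=100))
```

With the default of 50 percent, the two oldest indices are selected, which brings usage from 90 down to 30.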

Time-based Index Deletion

Time-based deletion is controlled by ILM through the $data_stream.policy.phases.delete.min_age setting in the lifecycle policy tied to each index. Note that size-based deletion takes priority over time-based deletion: if disk usage reaches retention_pct, indices will be deleted before their min_age value is reached.

Policies can be edited within the SOC administration interface by navigating to Administration -> Configuration -> elasticsearch -> $index -> policy -> phases -> delete -> min_age. Changes will take effect when a new index is created.
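The min_age comparison can be sketched as follows. This is an illustration rather than ILM's implementation: the helper names are hypothetical, only the `d`, `h`, `m`, and `s` units are handled, and the reference time is assumed to be the moment the index rolled over (or was created, if it never rolled over).

```python
import re
from datetime import datetime, timedelta, timezone

UNITS = {"d": "days", "h": "hours", "m": "minutes", "s": "seconds"}


def parse_min_age(value):
    """Convert a value such as '30d' into a timedelta (subset of units only)."""
    match = re.fullmatch(r"(\d+)([dhms])", value)
    if match is None:
        raise ValueError(f"unsupported min_age value: {value!r}")
    amount, unit = match.groups()
    return timedelta(**{UNITS[unit]: int(amount)})


def past_min_age(reference_time, min_age, now):
    """Return True once the index is at least min_age old."""
    return now - reference_time >= parse_min_age(min_age)


rolled_over = datetime(2022, 3, 1, tzinfo=timezone.utc)
print(past_min_age(rolled_over, "7d", datetime(2022, 3, 7, tzinfo=timezone.utc)))
print(past_min_age(rolled_over, "7d", datetime(2022, 3, 8, tzinfo=timezone.utc)))
```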

Re-indexing

Re-indexing may need to occur if field data types have changed and conflicts arise. This process can be VERY time-consuming, and we only recommend this if keeping data is absolutely critical.
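For orientation, a re-index is requested by sending a body that names a source and a destination to the Elasticsearch `_reindex` API. The sketch below only builds that body; the helper name and index names are hypothetical. When the destination is a data stream, Elasticsearch requires `op_type` to be `create`.

```python
import json


def reindex_body(source_index, dest_index, into_data_stream=False):
    """Build a request body for the _reindex API (hypothetical helper)."""
    dest = {"index": dest_index}
    if into_data_stream:
        # Data streams are append-only, so documents must be created.
        dest["op_type"] = "create"
    return {"source": {"index": source_index}, "dest": dest}


body = reindex_body("example-old-index", "logs-example-so", into_data_stream=True)
print(json.dumps(body, indent=2))
```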

Clearing

If you want to clear all Elasticsearch data including documents and indices, you can run the so-elastic-clear command.

GeoIP

Elasticsearch 8 no longer includes GeoIP databases by default. We include GeoIP databases for Elasticsearch so that all users will have GeoIP functionality. If your search nodes have Internet access and can reach geoip.elastic.co and storage.googleapis.com, then you can opt-in to database updates if you want more recent information. To do this, add the following to your Elasticsearch Salt config:

config:
  ingest:
    geoip:
      downloader:
        enabled: true
